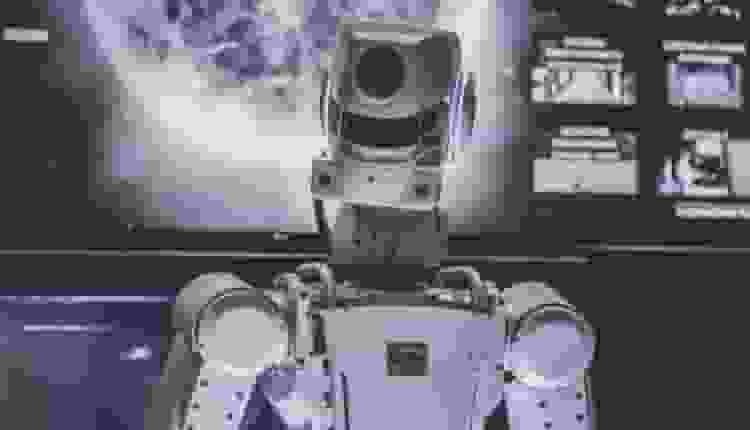

Some of the most well-known figures in technology have expressed concern that artificial intelligence may result in the extinction of humanity.

Artificial intelligence (AI) should be treated as a priority alongside other extinction-level hazards such as nuclear weapons and pandemics, according to a startling statement signed by academics and industry figures from around the world.

Experts Highlight AI as a Priority alongside Nuclear War and Pandemics

The signatories include many researchers, senior executives at firms such as Google DeepMind, the co-founder of Skype, and Sam Altman, chief executive of ChatGPT maker OpenAI.

Geoffrey Hinton, often called the "Godfather of AI", is another signatory. He recently announced his resignation from Google, citing the possibility that bad actors could use new AI technology to harm others and even bring about the extinction of humanity.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” the succinct statement reads.

A separate study, not yet peer reviewed and posted to the arXiv preprint server, hypothesized that AI might be the Great Filter that resolves the Fermi paradox: a force capable of wiping out sentient life before it can make contact with other species.

The Fermi paradox, often summed up by the question “Where is everyone?”, has baffled scientists for decades. It refers to the unsettling puzzle that, if extraterrestrial life is likely to exist in the universe, we have still not found any trace of it.

Many explanations have been proposed, each offering a different reason why we appear, so far, to be alone in the universe.


Examining the Threat to Civilization

Probability estimates, including the well-known Drake equation, suggest that the galaxy could host a number of intelligent civilizations, yet the cosmos remains puzzlingly silent.

The Great Filter, a well-known hypothesis, proposes that some step required for the emergence of sentient life is extremely unlikely, which would explain this cosmic silence.

A corollary of this theory is that some catastrophic event is probably preventing life from spreading through the universe.

According to the new research, the development of AI could be exactly the kind of catastrophic event capable of destroying entire civilizations.

Dr. Bailey, the study's author, frames the Great Filter within a broader discussion of the potential long-term harm posed by poorly understood technologies such as AI.
